Event JSON

{
  "id": "f393f7b2a6bdbd8cac37a7b78eaa9f155954bb2377e18581f1cf5f17d39053dc",
  "pubkey": "a3af17104f91f7f9b5667b14717d1d434931195e4c6e075b7dc13d8ed71bc46f",
  "created_at": 1713279308,
  "kind": 1,
  "tags": [
    [
      "proxy",
      "https://mastodon.social/users/arstechnica/statuses/112281472739015685",
      "activitypub"
    ],
    [
      "L",
      "pink.momostr"
    ],
    [
      "l",
      "pink.momostr.activitypub:https://mastodon.social/users/arstechnica/statuses/112281472739015685",
      "pink.momostr"
    ]
  ],

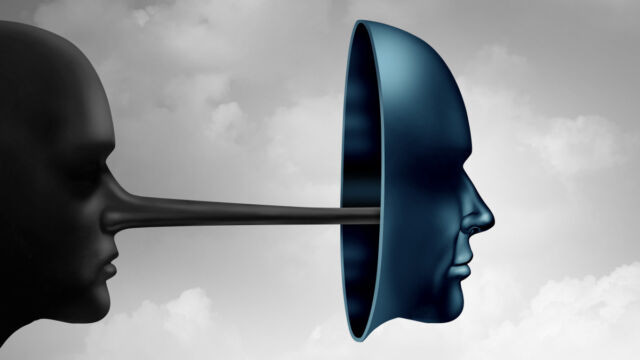

  "content": "UK seeks to criminalize creation of sexually explicit AI deepfake images without consent\n\nUnder new law, those who create the \"horrific images\" would face a fine and possible jail time.\n\nhttps://arstechnica.com/information-technology/2024/04/uk-seeks-to-criminalize-creation-of-sexually-explicit-ai-deepfake-images-without-consent/?utm_brand=arstechnica\u0026utm_social-type=owned\u0026utm_source=mastodon\u0026utm_medium=social\nhttps://files.mastodon.social/media_attachments/files/112/281/472/693/069/740/original/da38134c5cafc9ab.jpg\n",
  "sig": "cbeff4838c7e5189844a71ef15be1d15b37a6609bb728641d7b6292e96c303fed392bc7023260263e805b8ea6bcea72e603fa32408d4297f981bc69cfa02a757"
}
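Under NIP-01, the `id` field of a Nostr event is the lowercase hex SHA-256 of the UTF-8 bytes of the compact JSON array `[0, pubkey, created_at, kind, tags, content]`. The sketch below recomputes that value for an event like the one above using only the Python standard library. The helper names (`serialize_for_id`, `compute_event_id`, `id_matches`) are hypothetical, and the sketch assumes that `json.dumps` with compact separators and `ensure_ascii=False` follows the NIP-01 escaping rules. That holds for ordinary text such as this event's content, but it may diverge for rare control characters. The sketch does not verify the Schnorr `sig`, which would require a secp256k1 library.

```python
import hashlib
import json


def serialize_for_id(event: dict) -> str:
    """Build the NIP-01 commitment array as compact JSON.

    Hypothetical helper: [0, pubkey, created_at, kind, tags, content],
    no whitespace, non-ASCII characters kept verbatim.
    """
    payload = [
        0,
        event["pubkey"],
        event["created_at"],
        event["kind"],
        event["tags"],
        event["content"],
    ]
    # separators=(",", ":") drops the spaces json.dumps adds by default;
    # ensure_ascii=False keeps UTF-8 text (and "&") unescaped.
    return json.dumps(payload, separators=(",", ":"), ensure_ascii=False)


def compute_event_id(event: dict) -> str:
    """Return the lowercase hex SHA-256 of the serialized event."""
    data = serialize_for_id(event).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def id_matches(event: dict) -> bool:
    """Compare the stored id with a recomputed one (does not check 'sig')."""
    return compute_event_id(event) == event["id"]
```

To use it, parse the event text with `json.loads` and call `id_matches(event)`. A mismatch suggests that the JSON was altered after signing. Note that `json.loads` decodes the `\u0026` escapes in `content` back to `&`, and NIP-01 expects them to be serialized verbatim in that form.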