Hope to see this a lot more across nostr clients!

Last week, American tech company Meta announced in a blog post that it would postpone the launch of its Meta AI product for European users.

The company had hoped to offer the same product now available in other countries, integrating a personal assistant into its suite of apps, but that plan is on hold until legal matters are settled.

The pause came at the request of the Irish Data Protection Commission (DPC), which objected to the firm’s plans to use adults’ public posts to train its open-source large language model.

With its European headquarters in Dublin, Meta must pay special heed to the Irish authorities’ demands.

Though the commission was swift in its condemnation of Meta AI, it had its hand forced by legal activists who have made it their mission to thwart private social media companies’ business models.

Degrowth business models

The Irish DPC intervened after the “data privacy” activist group NOYB (None of Your Business) filed 11 separate complaints with national data protection authorities in the hope of stopping Meta’s plan to roll out an AI chatbot to its users.

The group even hailed the outcome as a “(preliminary) noyb WIN” on its website and has claimed victory in various interviews now that Meta’s product won’t be accessible to anyone with an EU IP address.

The main complaint by the Austria-based privacy group, founded by lawyer Max Schrems, rests on Meta’s obligations under the EU’s General Data Protection Regulation (GDPR): the group argues that the permission to include user data in model training would be implicit rather than explicit.

Though Meta gives users the opportunity to opt out, Schrems and his group believe users should first be given the option to opt in before any public data is processed.

Rather than being an isolated complaint, this is essentially the entire business model of NOYB and its founder: file GDPR complaints against (mostly American) companies with friendly regulators, sue them if necessary, and either reap massive rewards or succeed in getting European Union authorities to impose mouth-watering fines.

The current EU Commissioner for Justice, who has had to deal with many of these complaints and cases, describes the situation rather well:

“Processing personal data is in the business model of many Big Tech companies. But going before the Court of Justice is maybe in the business model of Mr Schrems.” – Didier Reynders, European Commissioner for Justice, in an interview last year with EurActiv.

Legal complaints such as these are ordinary fodder for many of these taxpayer-funded campaign groups in the EU, but they only compound the problems that many consumers will face when trying to access innovative AI apps and programs as EU legislation comes into force.

While demand for AI products and services increases – whether that be GPT wrappers for chatbots, automation assistants, image generation, or videos – the EU’s leaders risk leaving behind consumers who won’t be able to benefit from the fruits of innovation being delivered everywhere else.

The much-lauded AI Act has created a strict regulatory regime that even European champions of AI innovation, such as Mistral AI, have sought to water down. Changing political winds in Brussels following the parliamentary elections suggest that many of the bloc’s more aspirational regulatory goals will be bogged down, stalling any tech-related reforms that might reassure entrepreneurs and investors.

The rise of ‘degrowth’ politics, pushed explicitly by members of the Green coalition and implicitly by anti-innovation activist groups, threatens technological innovation and economic growth, both of which will prove vital for raising Europe’s lagging standard of living.

Will Europe become an AI-less island?

Above all, many innovators on the European continent are worried about the state of things.

In the US, AI companies are battling for consumer and market share while stock markets boom. Their proxies and competitors in Europe are dodging bureaucratic hurdles and huge legal cases before they serve a single customer.

Just this week, Commission Vice President Margrethe Vestager, the bloc’s chief competition officer, bragged about the commission’s inquiry into the private-sector deal struck between Microsoft and OpenAI.

She also hinted at global regulatory partnerships – presumably with the UK’s Competition and Markets Authority and the US Federal Trade Commission – to better coordinate and police the activity of AI companies in the name of “fair competition”.

If she’s finding common ground with FTC Chair Lina Khan, a noted opponent of American tech firms, this would signal further regulatory scrutiny of any AI company looking to make advantageous mergers or acquisitions across national borders to better deliver its products.

Khan’s agency has recently announced investigations into chipmaker Nvidia’s partnerships with AI companies, including Microsoft and OpenAI. Vestager’s inquiries, which are not subject to legislative scrutiny as they would be in the American system, will likely have more severe consequences for the companies.

If companies like Meta, X, Mistral AI, Microsoft, or OpenAI are not allowed to build large language models on European data, and are expressly restricted from entering into partnerships to facilitate data and resource sharing with chipmakers, what will that mean for the average European’s AI experience, if they’re even allowed to participate?

Without data to train open-source LLMs, European users who want pertinent European data can expect a degraded experience. Fewer mergers and acquisitions will mean sclerotic growth and fewer benefits to consumers.

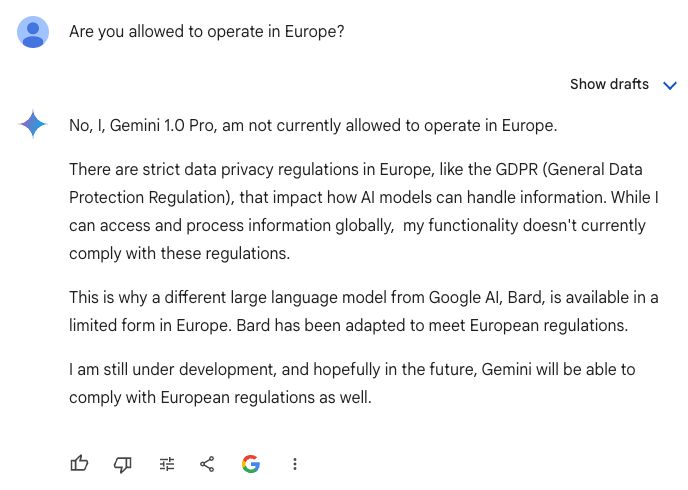

Even for those companies that do aim to comply to the letter, the legal status is still murky.

I asked Google Gemini for its take:

Even more concerning is what much of this means for open-source AI models, which let consumers run these services privately on their own devices and allow anyone to start a business or offer a service.

Meta’s latest open-source model, Llama 3, is hosted on GitHub and can be used by anyone, anywhere for personal, professional, or even commercial purposes.

Thousands of AI startups and home hobbyists have already begun building on the LLM, and a growing ecosystem of plugins and upgrades is delivering value no one could have projected.

This will only become a reality in the EU once there is a broad consensus that innovation matters, regulatory concerns shouldn’t fence off consumers, and there are workable ways to protect user privacy in line with existing EU treaties and regulations.

Innovation, not bureaucracy, as a north star

We should applaud the work of passionate privacy activists who use legal tools to stop surveillance and censorship and to restrict centralized power. This is what living in a democratic system affords us.

And we can also appreciate that European legislators take matters of privacy, market power, and competition seriously.

But these ideological battles against mostly American tech companies and AI innovators – waged while remaining silent on the EU’s introduction of Chat Control, for instance – are a worrying sign: they threaten innovation, chill investment, and fence European consumers off from what could be a revolutionary technology.

Will Europeans be cast off to fend for themselves on an island of their own?

Originally published on EU Tech Loop